Master a compliance-first presentation framework that avoids AI-washing by mapping every claim to evidence, data provenance, and disclosures, boosting investor trust.

Quick Answer

Presenting AI features without AI-washing is a precision exercise, not a pep talk. Use a lightweight, compliance-first slide framework that maps every claim to evidence, separates capability from ambition, and includes simple disclosures about limits and data provenance. The result is a presentation that persuades without AI-washing and builds trust rather than triggering scrutiny. Key move: anchor every bold claim to verifiable data, sources, and a crisp risk disclaimer.

Key Takeaway: A slide-by-slide, evidence-backed approach reduces risk while preserving persuasiveness in AI demos and investor decks.

Complete Guide to Presenting AI Without AI-Washing

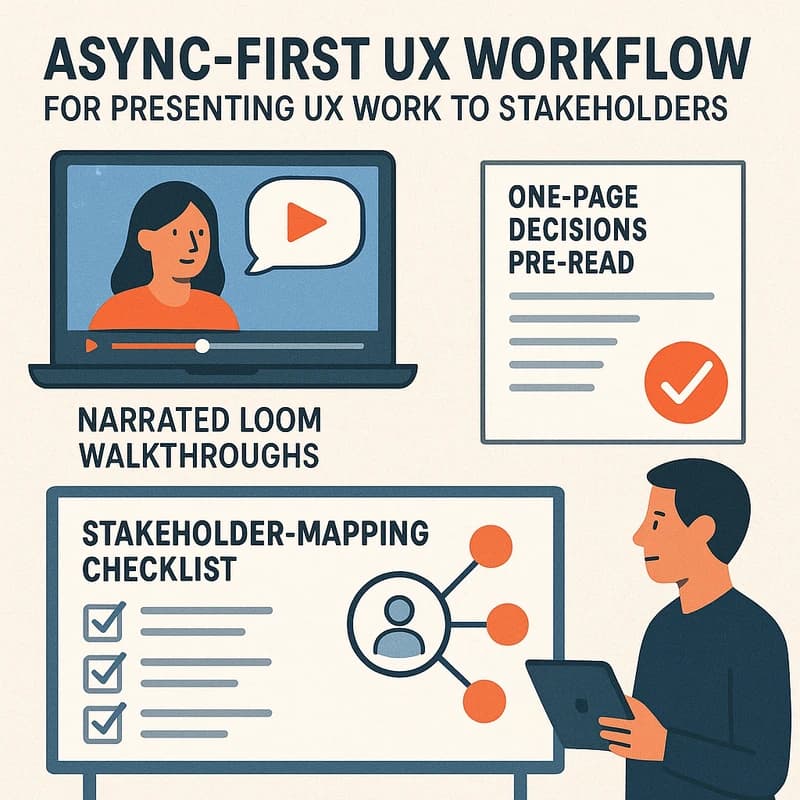

A practical, slide-by-slide method for presenting AI features truthfully, without overpromising or misrepresenting capabilities. The core idea is to turn every claim into a chain: claim → evidence → disclosure. This makes your deck robust for customers, boards, and regulators alike. In practice, you design each slide to convey value while signaling that you've done the hard work of verification.

- Start with a concise objective and context. Open with the customer need, not the hype. Then state what the AI can and cannot do, and set expectations early.

- Tie every claim to evidence. Use concrete metrics from pilots, third-party tests, or internal datasets. Show how you measured success and under what conditions the results hold.

- Layer in lightweight disclosures. A single slide can cover data provenance, model version, training data scope, limitations, and risk controls. Keep it readable, not onerous.

- Use visuals that illuminate, not mislead. Prefer ranges, confidence levels, and explicit conditions under which claims apply. Avoid absolutes like “perfect” or “always.”

- Build a compliance checklist into the deck. A short appendix with sources, validation steps, and responsible disclosure practices helps during Q&A and audits.

- Prepare investor and customer narratives separately. Investors care about governance and risk; customers care about outcomes and use-case fit. Both benefit from clean evidence trails.

- Practice with a live evidence pack. Demonstrations that include transparent data sources and verifiable results are harder to dispute and easier to defend.

- Be ready for edge-case questions. Expect to be asked about failure modes and how you handle them in production.

- Create a post-demo verification process. Have a systematic way to update claims as data accrues or product capabilities evolve.
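The “avoid absolutes” rule is easy to automate as a first pass before human review. The sketch below is a hypothetical lint, assuming you can export slide text as plain strings; the word list `ABSOLUTE_TERMS` is illustrative, not a regulatory standard, and a hit is a prompt to reword, not a verdict.

```python
import re

# Illustrative list only; agree on the real list with counsel.
ABSOLUTE_TERMS = ("perfect", "always", "never", "guaranteed", "flawless", "100%")


def flag_absolutes(slide_text: str) -> list[str]:
    """Return absolute terms found in slide_text (case-insensitive), in list order."""
    lowered = slide_text.lower()
    hits = []
    for term in ABSOLUTE_TERMS:
        # The leading word boundary stops "100%" matching inside "1100%".
        # A trailing boundary is added only for terms ending in a letter or digit,
        # so "never" does not match "nevertheless" while "100%" still matches.
        pattern = r"\b" + re.escape(term)
        if term[-1].isalnum():
            pattern += r"\b"
        if re.search(pattern, lowered):
            hits.append(term)
    return hits


print(flag_absolutes("Always accurate, 100% of the time"))  # prints ['always', '100%']
```

Word-boundary matching keeps false positives low; phrasing such as “in every case” still needs a human reader.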

Signals to guide you:

- Regulators and watchdogs increasingly emphasize disclosures around AI capabilities; enforcement actions related to overstated AI claims rose noticeably in 2024.

- Investors report that decks with explicit evidence and disclosures earn higher credibility scores in initial screening.

- Teams that separate claims from evidence typically shorten sales cycles and reduce last-minute compliance reversals.


Practical tip: prototype a 1-page evidence sheet that sits in your appendix. It lists each AI claim, the evidence source, date, sample size, and limitations.
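One way to keep that evidence sheet consistent is to store each entry as a structured record and export it for the appendix. This is a minimal sketch, assuming a CSV export suits your workflow; the `EvidenceEntry` fields mirror the columns listed in the tip, and the 180-day `max_age_days` review window is a hypothetical default that also supports the post-demo verification step by flagging stale evidence.

```python
import csv
import io
from dataclasses import dataclass
from datetime import date


@dataclass
class EvidenceEntry:
    claim: str
    source: str
    evidence_date: date
    sample_size: int
    limitations: str


def evidence_sheet_csv(entries: list[EvidenceEntry], today: date, max_age_days: int = 180) -> str:
    """Export entries as CSV, marking evidence older than max_age_days for re-verification."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["claim", "source", "date", "sample_size", "limitations", "status"])
    for e in entries:
        # Old evidence is flagged rather than silently reused in the next deck.
        stale = (today - e.evidence_date).days > max_age_days
        writer.writerow([
            e.claim,
            e.source,
            e.evidence_date.isoformat(),
            e.sample_size,
            e.limitations,
            "re-verify" if stale else "current",
        ])
    return buf.getvalue()
```

The CSV opens in any spreadsheet tool, so reviewers can sort by status before each pitch.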

Key Takeaway: Presenting AI without AI-washing centers on evidence-to-claim mapping, lightweight disclosures, and a repeatable, audit-friendly slide framework.

Why This Matters

Recently, the landscape around AI claims in pitches has hardened. Public enforcement updates and risk assessments have sharpened the definition of “truthful AI” in investor decks and customer demos. Founders who embed evidence and disclosures in their slides report a more resilient investor reception and fewer post-presentation retractions.


Recent developments and trends:

- Regulators have signaled that AI claims in decks and demos are subject to disclosure standards similar to other financial or performance claims; the risk of “AI-washing” penalties is rising.

- Investor confidence hinges on traceable data: presenters who show verifiable results and third-party validation tend to secure earlier commitments.

- Governance conversations are moving from “we can” to “we can and we will prove it,” with boards increasingly asking for data provenance, model lifecycle detail, and risk controls.


Observations you can reflect in slides:

- Companies that accompany AI claims with explicit data provenance and sample sizes tend to earn more investor trust.

- Demos that separate capability descriptions from measured outcomes achieve longer-term engagement from buyers who want to test claims themselves.

- In regulatory reviews, missing disclosures about limitations and data sources commonly trigger red flags, even when performance looks strong.


Expert perspective (paraphrased insights):

- “Truthful AI claims are not optional—they are a form of risk management,” notes a regulatory counsel familiar with AI disclosures.

- “Transparency about data provenance and model limits reduces surprise during due diligence,” observes a sector analyst.

- “Lightweight disclosures can coexist with persuasive storytelling,” says a growth‑stage founder who runs tight, evidence‑driven demos.

When you treat AI-washing as a framework problem rather than a single-slide fix, the path to a compliant, compelling presentation becomes clear: design slides that tell the story of value, then layer in the safeguards that regulators and savvy buyers expect.

Key Takeaway: The current momentum favors decks that pair claims with evidence and incorporate concise disclosures, reducing risk while maintaining persuasive power.

People Also Ask

What follows answers several common questions about AI-washing in presentations, drawing on the practical framework you can apply today. Each entry links a real-world concern to an actionable response you can drop into your slides or notes.

What is AI-washing and why does it matter in investor pitches?

AI-washing is overstating or misrepresenting AI capabilities in pitches or demos. It matters because investors rely on credible claims, and misrepresentation can trigger regulatory scrutiny, reputational damage, and financing delays. Ground all claims in verifiable data, note limitations, and avoid absolutes. Key Takeaway: Treat avoiding AI-washing as a risk-management discipline: honesty builds trust and accelerates due diligence.

How can I present AI features truthfully in a demo?

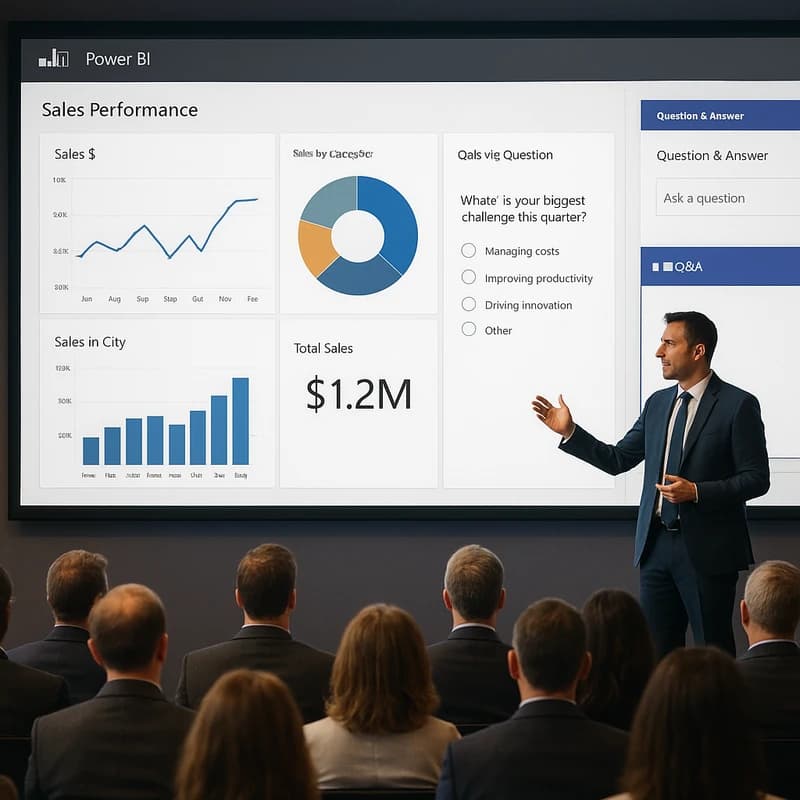

Structure each claim with a clear problem, the AI solution, and the evidence supporting the claim. Include a “data provenance and limitations” slide, show results from controlled pilots when possible, and disclose model versions and training scope. Use ranges and conditional language to reflect real-world performance. Key Takeaway: Truthful demos balance value with verifiable evidence and clear caveats.

What disclosures should be included in AI marketing slides?

Disclosures should cover data sources, sample sizes, model version, training data breadth, known limitations, risk controls, and what you can realistically promise vs. what you are still validating. Include a brief appendix with references and contact details for verification. Key Takeaway: Lightweight, precise disclosures reduce post‑presentation questions and regulatory risk.
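Before a deck goes out, a short completeness check can confirm that every item in this disclosure list is actually filled in. This sketch assumes each disclosure slide is captured as a dictionary; the field names in `REQUIRED_DISCLOSURES` are hypothetical labels for the items above, not an official schema.

```python
# Hypothetical field names that mirror the disclosure list above.
REQUIRED_DISCLOSURES = (
    "data_sources",
    "sample_sizes",
    "model_version",
    "training_data_scope",
    "known_limitations",
    "risk_controls",
    "validated_vs_in_progress",
)


def missing_disclosures(slide: dict[str, object]) -> list[str]:
    """Return required disclosure fields that are absent, None, or blank on a slide."""
    missing = []
    for field in REQUIRED_DISCLOSURES:
        value = slide.get(field)
        # Whitespace-only text counts as missing, so placeholders cannot slip through.
        if value is None or not str(value).strip():
            missing.append(field)
    return missing
```

An empty result only means every field has text; whether that text is accurate still needs human and counsel review.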

How do I tie AI claims to evidence in a deck?

For every claim, attach a data point: pilot metrics, third‑party test results, or internal validation with dates and sample characteristics. Reference the evidence sources in footnotes and place the strongest evidence near the claim to guide comprehension. Key Takeaway: Evidence-forward decks are more credible and easier to defend.
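The claim-plus-footnote pattern can be generated mechanically so numbering never drifts between slide and footnotes. A minimal sketch, assuming claims arrive as hypothetical `(claim, evidence_source)` pairs; it refuses any claim that has no source, which enforces the “every claim gets evidence” rule at build time.

```python
def attach_footnotes(claims: list[tuple[str, str]]) -> tuple[list[str], list[str]]:
    """Number each (claim, evidence source) pair; return slide lines and matching footnotes."""
    slide_lines: list[str] = []
    footnotes: list[str] = []
    for number, (claim, source) in enumerate(claims, start=1):
        # Refuse to place a claim on the slide without a source to cite.
        if not source.strip():
            raise ValueError(f"claim has no evidence source: {claim!r}")
        slide_lines.append(f"{claim} [{number}]")
        footnotes.append(f"[{number}] {source}")
    return slide_lines, footnotes
```

Raising an error, rather than emitting an empty footnote, makes a missing source a hard stop instead of something noticed during Q&A.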

Are there SEC concerns about overstating AI in presentations?

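Yes. The SEC has already brought AI-washing cases: in March 2024 it settled charges against two investment advisers, Delphia (USA) Inc. and Global Predictions Inc., for false and misleading statements about their use of AI. More broadly, regulators are scrutinizing AI claims in business materials, including investor decks, with emphasis on accuracy, provenance, and disclosures. A compliance-first approach that maps claims to evidence and clearly states limitations aligns with current expectations. Key Takeaway: Proactive disclosures and evidence alignment help preempt regulatory risk.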

What is a compliance-first framework for AI presentations?

A framework that (a) defines claims, (b) maps each claim to evidence, (c) adds lightweight disclosures about data provenance and limitations, (d) uses cautious language, and (e) includes an evidence appendix for due diligence. Key Takeaway: A structured, evidence-backed framework reduces AI-washing risk while preserving persuasive power.
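Steps (a) through (e) can be tracked per claim so gaps surface before the deck review rather than during it. This is a sketch under stated assumptions: the `Claim` fields and the `framework_gaps` helper are hypothetical, and step (d), cautious language, is treated as a human wording judgment rather than something the code checks.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str                                           # (a) the claim as worded on the slide
    evidence: list[str] = field(default_factory=list)   # (b) evidence sources mapped to it
    disclosure: str = ""                                # (c) provenance / limitations note
    appendix_ref: str = ""                              # (e) pointer into the evidence appendix


def framework_gaps(claim: Claim) -> list[str]:
    """List which framework steps a claim has not yet satisfied.

    Step (d), cautious language, is a wording judgment left to human review here.
    """
    gaps = []
    if not claim.evidence:
        gaps.append("(b) no evidence mapped")
    if not claim.disclosure.strip():
        gaps.append("(c) no disclosure")
    if not claim.appendix_ref.strip():
        gaps.append("(e) not in evidence appendix")
    return gaps
```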

How can I show data provenance and model risk in decks?

Include a dedicated slide or appendix with: data sources, sampling methods, data quality indicators, model version, training data scope, validation results, and known risks or failure modes. Offer a simple explanation of how you mitigate these risks in production. Key Takeaway: Provenance and risk visibility boost credibility and investor confidence.
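Keeping provenance in one structured record makes the slide and the appendix come from the same source of truth. A minimal sketch, assuming the hypothetical `ProvenanceRecord` fields below (they follow the list in this answer); `to_slide_bullets` simply renders each field as a labeled bullet.

```python
from dataclasses import dataclass, fields


@dataclass
class ProvenanceRecord:
    # Hypothetical field set following the list above; adapt to your own template.
    data_sources: str
    sampling_method: str
    data_quality: str
    model_version: str
    training_data_scope: str
    validation_results: str
    known_risks: str
    mitigations: str


def to_slide_bullets(record: ProvenanceRecord) -> list[str]:
    """Render each field as a 'Label: value' bullet, in declaration order."""
    bullets = []
    for f in fields(record):
        label = f.name.replace("_", " ").capitalize()
        bullets.append(f"- {label}: {getattr(record, f.name)}")
    return bullets
```

Because every field is required by the dataclass, a record cannot be created with a provenance item silently omitted.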

How should I handle limitations and edge cases in demos?

Acknowledge limitations explicitly, describe scenarios where the AI may underperform, and outline remediation steps or fallback processes. Avoid implying universal accuracy; present contingency plans and monitoring strategies. Key Takeaway: Edge-case handling signals maturity and governance.
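A fallback process is easier to explain on a slide when it is a concrete rule. The sketch below shows one common pattern, a confidence threshold that routes uncertain outputs to human review; the `Prediction` type, the `route` helper, and the 0.8 threshold are hypothetical, and a real threshold should come from validation data, since model confidence scores are not necessarily calibrated.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model-reported score in [0, 1]; may not be calibrated


def route(prediction: Prediction, threshold: float = 0.8) -> str:
    """Auto-accept outputs at or above threshold; send the rest to a human fallback queue."""
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return "auto" if prediction.confidence >= threshold else "human_review"
```

Stating the rule this plainly in a demo also answers the follow-up question of what share of cases lands in the human queue, which you can then report from monitoring.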

What are best practices for third-party validation in investor decks?

Incorporate independent test results, external audits, or third‑party certifications where feasible. If external validation isn’t available, transparently describe internal validation methods and sample sizes, and prioritize longer-term verification plans. Key Takeaway: Third‑party validation (or transparent internal validation) strengthens trust.

How can startups structure data in a way that preserves credibility without killing momentum?

Present data in digestible formats (charts, ranges, summaries) and avoid overloading with raw metrics. Use storytelling to connect validation results to customer outcomes, and keep a running log of updates to evidence as you iterate. Key Takeaway: Credible decks maintain momentum by balancing data clarity with clear, evolving validation narratives.
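Ranges are more credible than point estimates when pilot samples are small. One standard way to turn a pilot success count into a range is the Wilson score interval, sketched here; the function name is ours, and `z = 1.96` gives an approximate 95% interval.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a success rate; z=1.96 gives roughly 95% coverage."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width
```

For example, 85 successes in 100 pilot cases gives roughly 0.77 to 0.91, so a slide could say “about 77% to 91% in the pilot” instead of “85% accurate.”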



Next steps you can take today:

- Create a one-page evidence sheet template for your next deck, listing each AI claim, evidence source, sample size, date, and limitations.

- Draft a lightweight disclosure slide that covers data provenance, model version, and known risks, and reserve time in your slide review for counsel input.

- Build an internal checklist: for every claim, is there a verifiable source? Is there a caveat visible to the audience? Is the audience likely to scrutinize the source?
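The three checklist questions can be turned into action notes during slide review. A minimal sketch, assuming each claim's answers are recorded as booleans in a hypothetical `ChecklistItem`; the note wording and the BLOCK/FIX/PREP labels are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    claim: str
    has_verifiable_source: bool
    caveat_visible: bool
    likely_scrutinized: bool


def review_notes(item: ChecklistItem) -> list[str]:
    """Turn the three checklist answers into action notes for the slide review."""
    notes = []
    if not item.has_verifiable_source:
        notes.append("BLOCK: add a verifiable source or drop the claim")
    if not item.caveat_visible:
        notes.append("FIX: make the caveat visible to the audience")
    if item.likely_scrutinized:
        notes.append("PREP: have the source ready for Q&A")
    return notes
```

A claim with no notes is ready; a BLOCK note means it should not ship in the current deck.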

Key Takeaway: The practical guardrails (evidence mapping, disclosures, and a simple validation appendix) turn AI-washing risk into an advantage, strengthening both trust and clarity in your demonstrations.