Explore how the Nvidia AI arms race is reshaping policy, supply chains, and hyperscaler spending, and why Nvidia sits at the center of AI compute.

Quick Answer

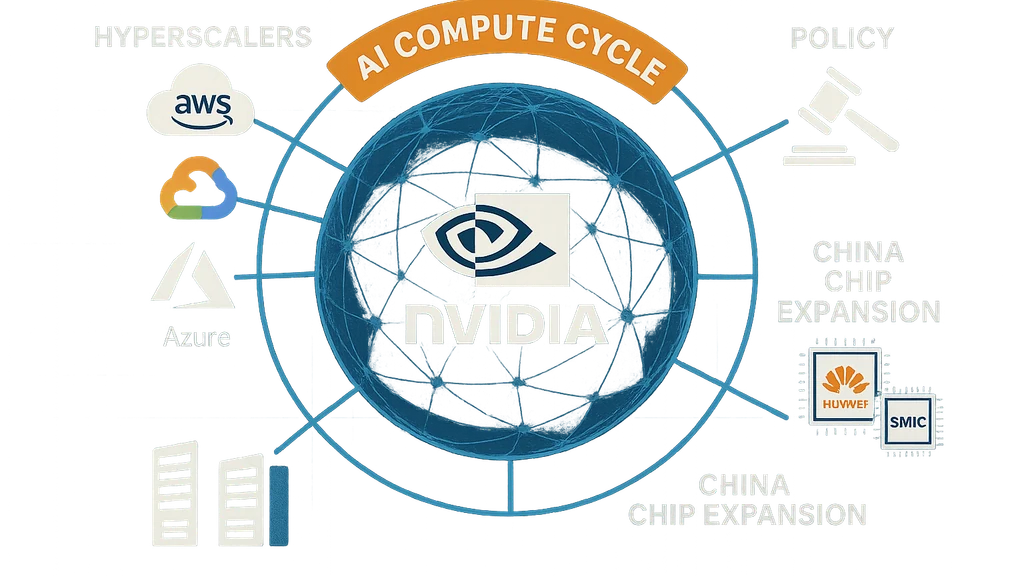

The Nvidia AI arms race is shaping today’s global tech narrative. Nvidia’s latest guidance points to roughly $54B in Q3 revenue, underscoring strong hyperscaler demand for AI infrastructure. Washington has signaled interest in taking stakes in strategic sectors but not in buying into Nvidia itself, keeping policy focused on industrial and technology leverage, while China accelerates its own AI‑chip push through Huawei‑linked facilities and expanded SMIC capacity. Cloud providers continue to lock in tens of thousands of Nvidia accelerators, helping sustain the AI compute cycle even amid export‑control headwinds. Takeaway: Nvidia sits at the heart of the AI arms race, with policy, competition, and supply chains all in play.

Key Takeaway: Nvidia is the center of gravity for the AI compute cycle, with global policy and geopolitics amplifying the stakes.

Complete Guide to the Nvidia AI arms race

The current AI hardware landscape reads like a global chessboard where chips, policy, and capex decisions move in near-synchrony. Nvidia’s guidance anchors the story: a quarterly revenue run rate in the tens of billions of dollars, persistent hyperscaler demand, and a set of geopolitical headwinds that could rearrange supply chains and vendor loyalties over the coming quarters. My takeaway is that we’re watching more than a company’s growth curve; we’re watching a multi‑country contest over who controls AI infrastructure.

Nvidia AI arms race: Q3 revenue forecast and what it signals

- Nvidia’s Q3 revenue forecast of about $54 billion signals that demand for AI compute infrastructure remains robust. The guidance arrives as investors weigh growth‑rate expectations and the mix of revenue streams within a broader AI platform strategy. For anyone following the AI hardware market, it is a reminder that GPUs and other accelerators remain the backbone of practical AI deployment in data centers.

- The momentum is driven by hyperscalers expanding AI workloads, from training ever larger models to running real‑time inference across sprawling data ecosystems. This stickiness matters: it isn’t a one‑quarter surge; it’s a multi‑quarter cadence in which Nvidia’s share of AI compute demand stays significant.

- The broader takeaway is that Q3 guidance reinforces the notion that the AI compute cycle, in which chipmakers supply rapid, scalable accelerators to cloud giants, has become a structural trend in enterprise technology spending. The implications extend to suppliers, integrators, and the downstream developers who rely on hardware to unlock new AI capabilities.

Key Takeaway: Nvidia’s Q3 guidance cements AI hardware demand as a durable, multi‑quarter wave, reinforcing Nvidia’s central role in the AI arms race.

U.S. stance on Nvidia stake: policy signals without a purchase

- The U.S. is weighing stakes in strategic sectors as part of a broader industrial policy, but recent commentary indicates Washington is not considering buying a stake in Nvidia itself. The emphasis is on policy leverage (protecting national security interests and safeguarding critical technology) while keeping open the option of taking strategic stakes in other industries.

- This stance reflects a shift toward policy tools that guide capital deployment and supply chains rather than unilateral equity investments in single firms. For investors and industry, the signal is that chip‑ and AI‑related assets will be scrutinized for national‑interest considerations, with potential spillovers into financing structures and export controls.

- The net effect is a policy environment that seeks to balance advancement in AI with national security concerns, potentially altering partnership strategies, supplier diversification, and how funding flows into AI hardware ecosystems.

Key Takeaway: U.S. policy levers are increasingly strategic. Watch how they shape investments, collaborations, and export controls across the AI hardware ecosystem.

China’s AI chip push: domestic capacity and geopolitical momentum

- China is accelerating plans to reduce reliance on Nvidia by tripling domestic AI‑chip output via new Huawei‑linked facilities and expanded SMIC capacity. This push reflects a dual motive: to bolster domestic AI workloads and to diversify supply away from a single supplier model that has intensified export‑control pressure.

- Huawei’s initiatives and SMIC’s expansion add a parallel track to the AI hardware race—one that emphasizes self‑reliance in chipmaking, domestic capacity growth, and integrated AI software‑hardware stacks. It also introduces new dynamics for global supply chains, including potential shifts in where AI compute happens (domestically vs. internationally).

- The China narrative matters for long‑term competitiveness in AI, particularly as global tech policy converges on export controls and investment restrictions intended to curb access to cutting‑edge AI accelerators by certain regions.

Key Takeaway: China’s domestic AI chip push adds substantial non‑Nvidia capacity into the global AI hardware equation, intensifying competition and supply‑chain diversification.

Cloud providers and Nvidia accelerators: the demand anchor

- Cloud providers are locking in tens of thousands of Nvidia accelerators, underscoring that AI compute remains a capex‑heavy, multi‑year infrastructure investment. This persistent demand supports a steady revenue tail for Nvidia but also places emphasis on supply chain resilience and pricing dynamics.

- For data‑center operators, the implication is straightforward: AI workloads, from large‑scale training to real‑time inference, require sustained access to GPU accelerators. As a result, data‑center design, cooling, power efficiency, and ecosystem tooling become as important as the chips themselves.

- The ongoing procurement trend helps explain why export‑control considerations matter: the ability of global customers to secure accelerators may hinge on policy timing and licensing regimes, influencing capex plans across hyperscalers and enterprises.

Key Takeaway: The cloud‑infrastructure demand for Nvidia accelerators anchors the AI arms race, shaping capex, supply chains, and regional competitiveness.

Export controls, policy headwinds, and the broader risk landscape

- Export controls remain a salient factor in Nvidia’s business environment, particularly as governments reassess tech transfers to restricted regions. While the immediate policy moves emphasize safeguarding critical technologies, they also introduce uncertainty for suppliers, manufacturers, and customers that depend on stable cross‑border supply chains.

- The risk landscape extends beyond Nvidia to include related suppliers, customers, and even downstream developers who rely on stable access to accelerators for model training and inference workloads. Strategic stockpiles, alternative chipmakers, and regional manufacturing capacity become part of the risk mitigation conversation.

- In practice, export controls can tilt procurement strategies toward diversified suppliers, on‑shoring initiatives, and accelerated hardware‑adjacent software optimization to maximize efficiency with available accelerators.

Key Takeaway: Export controls add global policy friction to the AI arms race, encouraging diversification, resilience, and alternative pathways to AI compute.

The big picture: why Nvidia sits at the center of the AI compute cycle

- Nvidia’s hardware, software ecosystems, and developer tools have entrenched the company as a critical node in AI workloads. This centrality means that any shifts in policy, competitive pressure from China, or changes in hyperscaler procurement can ripple across the entire AI hardware and software supply chain.

- The AI compute cycle hinges on the interplay of demand (hyperscalers and enterprise buyers), supply (accelerator chips and manufacturing capacity), and policy (export controls, strategic investments). Nvidia’s guidance provides a lens into how durable this cycle might be, even as external forces push for diversification and resilience.

- For anyone watching the next era of computing, this is a reminder that compute capability will be shaped as much by government policy and industrial strategy as by pure technology breakthroughs. It’s a multi‑country race where hardware, policy, and capital all matter.

Key Takeaway: Nvidia is not just selling GPUs; it’s shaping an entire economic and policy‑driven cycle around AI compute.

Related topics

- AI compute cycle

- Nvidia accelerators and data-center GPUs

- Cloud providers and hyperscalers

- Export controls and semiconductor policy

- Huawei and SMIC AI chip expansion

- AI hardware market competition

Why This Matters

In the last few months, the AI hardware story has shifted from “one company’s growth” to a geopolitical and policy‑driven tug of war. Nvidia’s strong Q3 guidance keeps the company at the center of new compute cycles, while the U.S. policy posture signals that technology leadership remains a national strategic priority rather than a purely private ambition. At the same time, China’s push to triple domestic AI‑chip output introduces a credible challenge to Nvidia’s dominance in the global AI hardware stack, complicating supply chains and potentially reshaping who sells what and where AI workloads run.

Recent developments illustrate a broader trend toward multi‑polar AI infrastructure:

- Hyperscalers continue to spend heavily on AI infrastructure, ensuring a robust demand environment for accelerators even as macro concerns rise.

- U.S. policy signals emphasize strategic engagement with tech sectors, creating a framework in which investments, licensing, and stakes in critical industries influence corporate strategy and capital flows.

- China’s domestic chip push accelerates the diversification of AI hardware sources, potentially strengthening regional ecosystems around Huawei, SMIC, and allied software stacks.

Key Takeaway: The AI arms race is evolving into a global, policy‑aware competition that will shape tech investment, supply chains, and regional strengths in AI compute for years to come.

People Also Ask

What does Nvidia's Q3 revenue forecast mean for AI demand?

Nvidia’s Q3 revenue forecast signals sustained, even expanding, AI demand across hyperscalers and enterprise customers. A roughly $54B quarterly run rate implies continued investments in AI training capacity, model deployment at scale, and real‑time inference across data centers. The implication for developers and operators is straightforward: more access to high‑powered accelerators, more pipelines for AI workloads, and a longer horizon of investment in AI infrastructure. The positive signal also invites questions about profitability mix, supply chain constraints, and whether growth can remain as fast in the face of geopolitical headwinds. Key Takeaway: The Q3 forecast reinforces the durability of AI infrastructure demand and Nvidia’s central role in that cycle.

Is the US considering buying a stake in Nvidia?

The current policy posture emphasizes strategic leverage rather than a direct equity stake in Nvidia. Officials have signaled openness to stakes in other critical industries, while making clear that a government purchase of Nvidia is not on the table. This approach aims to preserve competitive dynamics, protect national security, and maintain a diversified approach to funding AI deployment across sectors. For investors, it suggests a policy environment where strategic considerations could influence capital flows and partnerships without distorting market competition. Key Takeaway: The U.S. stance is about strategic influence, not government ownership, creating a nuanced policy backdrop for Nvidia and the broader AI hardware ecosystem.

How is China boosting its AI chip production?

China’s efforts leverage Huawei‑linked facilities and expanded SMIC capacity to triple domestic AI‑chip output. This push seeks to reduce reliance on outside suppliers and cultivate a domestic AI hardware stack that can support homegrown AI software and services. The move compounds global competition in AI accelerators and chips, impacting pricing dynamics, supplier choices, and potential licensing considerations for global customers seeking cross‑border AI compute access. Key Takeaway: China’s domestic chip push broadens the competitive field and affects global AI hardware sourcing and policy calculations.

Which cloud providers are locking in Nvidia accelerators?

Large cloud providers are locking in tens of thousands of Nvidia accelerators to support AI training, inference, and mixed workloads. This demand anchors Nvidia’s revenue trajectory and underpins a resilient ecosystem around CUDA tooling, software libraries, and developer ecosystems. It also heightens the importance of supply chain reliability, licensing regimes, and hardware efficiency in data centers. Key Takeaway: Cloud demand for Nvidia accelerators sustains a long, durable AI compute cycle with implications for data centers and software ecosystems.

What impact do export controls have on Nvidia?

Export controls influence Nvidia’s ability to sell to certain customers and to operate in specific markets. They introduce regulatory risk and potential licensing frictions that can affect pricing, timing, and product strategy. The broader effect is a push toward diversified supplier networks and on‑shoring considerations, as governments weigh the balance between promoting AI progress and national security interests. Key Takeaway: Export controls inject policy risk into the AI arms race, prompting diversification and strategic planning across ecosystems.

Why is Nvidia central to the AI compute cycle?

Nvidia’s accelerators, software platforms, and developer ecosystems have become the de facto standard for powering AI workloads in data centers. This centrality means that any shift in supply, policy, or demand reverberates across the AI hardware market, from chipmakers to hyperscale operators and software builders. The AI compute cycle hinges on durable access to accelerators, efficient data centers, and a thriving ecosystem that supports AI model development and deployment. Key Takeaway: Nvidia’s tech stack and market position make it a linchpin of the AI compute cycle, amplifying the impact of policy and geopolitical dynamics.

How might export controls affect future Nvidia pricing?

Export controls can constrain supply to certain regions or customers, potentially influencing pricing, inventory strategies, and licensing terms. If supply becomes more restricted for strategic reasons, Nvidia and its distributors could see tighter market conditions in restricted markets while other regions absorb more demand. The long‑term effect is a more segmented pricing landscape and increased emphasis on regional partnerships and supply chain resilience. Key Takeaway: Export controls could reshape pricing and regional strategies, driving greater emphasis on resilience and diversification.

What role do hyperscalers play in Nvidia’s AI arms race?

Hyperscalers are the primary engines of sustained demand for Nvidia accelerators. Their AI workloads require scalable, high‑throughput compute, and their procurement decisions set the pace for supplier capacity, pricing, and product roadmaps. The health of these customers often drives downstream ecosystem investments, from data‑center design to software optimization and chip‑level innovations. Key Takeaway: Hyperscalers fuel the AI arms race, underpinning Nvidia’s revenue and shaping the broader hardware ecosystem.

How does Huawei‑SMIC competition affect the global AI landscape?

Huawei’s AI chip initiatives and SMIC’s production capacity expansions introduce a significant competitive dynamic outside Nvidia. This development diversifies supply sources, spreads risk, and opens potential collaboration opportunities across regions. It also influences licensing, export controls, and global data‑center strategy, as companies weigh where AI workloads will run and which suppliers will power them. Key Takeaway: Huawei‑SMIC activity adds a critical alternative thread to the AI hardware narrative, potentially reshaping global supply chains.

What this all means for the near future

- The AI arms race remains a multi‑country, policy‑driven competition that reaches far beyond quarterly results. Nvidia’s strong revenue guidance keeps the company at the core of AI compute, while policy shifts and China’s chip push could reallocate growth opportunities and alter supplier dynamics in ways that ripple through the market for years.

- For communities and individuals—especially in smaller towns and emerging economies—the immediate implication is more attention on AI infrastructure jobs, training pipelines, and opportunities to participate in the AI era through software, hardware, and services that ride the wave of this compute cycle.

- The medium‑term narrative remains that Nvidia and its peers sit at the heart of the next compute cycle, with governments and industry players shaping the rules of engagement as much as the technology itself.

Key Takeaway: The near future in AI hardware is a convergence of company strategy, policy decisions, and national priorities—where Nvidia sits at the center of a broader, global competition over AI compute.

Next Steps

- Track Nvidia’s quarterly reports and related commentary from policy makers and industry analysts to gauge how the AI arms race evolves.

- Monitor Huawei‑SMIC developments and export control updates to anticipate shifts in the AI hardware supply chain.

- Explore how hyperscalers adjust capex plans in response to policy changes and geopolitical developments, and how that affects accelerator pricing and availability.

- Consider how researchers and developers can prepare for a multi‑vendor, policy‑constrained AI compute environment by investing in software optimization, cross‑hardware tooling, and robust deployment strategies.

Final takeaway

The Nvidia AI arms race isn’t a single company story; it’s a layered, global event that ties together quarterly revenue trajectories, policy ambitions, and strategic moves by major tech players. As the U.S. weighs stakes and China accelerates domestic chip production, the next chapters will define where AI compute happens, who builds it, and how fast the world can translate AI breakthroughs into real, scalable applications.

Note: The narrative above draws on recent media reporting about Nvidia’s Q3 guidance, U.S. policy discussions around stakes and industrial policy, and China’s AI chip expansion plans with Huawei and SMIC. It’s written to reflect how these evolving dynamics influence the global AI arms race and the broader markets for AI hardware.