AI regulation 2025 reshapes policy, ethics, and industry. Explore risk-based governance, privacy safeguards, and how green AI can prosper - learn more.

Quick Answer

AI regulation 2025 is shaping up as a pivotal, globally watched debate that blends a push for ethical, transparent AI with loud concerns about jobs and privacy. On X, policymakers, researchers, and influencers sketch a future where governance is risk-based, enforceable across borders, and grounded in human-centered values. The mood swings between excitement for safer, smarter systems and caution about overreach and surveillance, making AI regulation 2025 a defining policy moment.

Key Takeaway: AI regulation 2025 sits at the crossroads of innovation and stewardship, with optimism about ethical AI paired with real worries about labor and privacy.

Complete Guide to AI regulation 2025

From the forest floor to the data center, AI regulation 2025 is a story of balance and consequence. The conversations center on how to govern powerful machine-learning systems without stifling creativity or slowing climate-smart breakthroughs. This guide stitches together policy concepts, recent developments, and the social-media pulse echoing across boardrooms, classrooms, and communities.

- Primary keyword focus: AI regulation 2025 appears as a throughline in discussions of governance, ethics, and public accountability. Across posts and threads, mentions of AI regulation 2025 intermingle with calls for transparency, safety, and fairness.


- Data-driven sentiment notes: Social listening shows a split in tone: enthusiasm about breakthroughs in machine learning and caution about unintended consequences for workers and civil liberties. Analysts point to a growth in cross-border policy dialogue and in-depth white papers from think tanks and industry groups.

- Real-world anchors: The European Union’s risk-based approach to AI, the OECD AI Principles, and ongoing national pilot programs provide practical templates that shape debates about AI regulation 2025. Meanwhile, global summits and bilateral discussions test how to align divergent regulatory cultures with shared ethics.

- Eco-tech angle: As a climate-focused observer, I see AI regulation 2025 as a way to ensure green AI development—rewarding energy-efficient models, reducing data-center waste, and encouraging open-data practices that protect ecosystems.

What’s at stake in this complete guide is not just rules for algorithms, but a framework for responsible innovation. The debates touch talent pipelines, startup ecology, and the ability of researchers to publish reproducible, auditable results. The policy proposals push for accountability, transparency, and stakeholder participation, all while attempting to minimize negative spillovers into climate-conscious tech adoption.

- Data points and expert observations:

- A notable uptick in cross-national forums discussing AI regulation 2025 indicates a trend toward harmonized standards, even as countries retain distinct regulatory styles.

- Several governments have introduced pilot “AI transparency” dashboards for public deployment, setting a precedent for openly published reporting on risk levels and testing outcomes.

- Independent researchers emphasize avoiding regulatory capture by ensuring stakeholder diversity, including civil-society voices from environmental and community groups.

- Related topics to explore in depth (for internal linking): climate-tech policy, data privacy safeguards, digital sovereignty, algorithmic bias, sustainability in tech, open-data governance.

Key Takeaway: AI regulation 2025 is less about one-size-fits-all law and more about adaptable, globally interoperable frameworks that protect people and the planet while enabling responsible AI innovation.

Why This Matters in 2025

The year 2025 amplifies the urgency of AI regulation 2025. The surge in real-time policy discourse coincides with tangible breakthroughs in machine learning, ethical AI techniques, and the increasing digitization of everyday life. For activists, educators, and developers alike, the questions are not only "how should these systems behave?" but also "who is accountable when things go wrong, and how does this affect our communities and environment?"

- Current relevance: Regulators are pressed to deliver clarity for developers building high-stakes AI (health, safety, energy) and to establish guardrails that protect privacy without smothering innovation. The eco-advocate in me sees a rare alignment of climate ambitions with AI governance: better transparency can reduce energy waste, and standardized reporting can expose carbon-heavy pipelines in data processing.

- Trends in the last 3 months:

- Global AI regulation debates have intensified around cross-border data flows and the need for interoperable standards to avoid a patchwork of rules that stifle global collaboration.

- Public sentiment on X reveals a divide: excitement about ethical AI breakthroughs versus anxiety about job disruption and surveillance.

- Expert lens: Thought leaders emphasize risk-based approaches that prioritize transparency, auditability, and user control, while staying mindful of innovation ecosystems, especially for green tech startups and climate analytics firms.

- Practical implications: For policymakers, the trade-off is clear—maximize accountability and privacy protections while preserving incentives for green AI research, open data collaboration, and inclusive growth.

Key Takeaway: AI regulation 2025 matters because it shapes how societies balance safety, privacy, and fair opportunity against the pace of technological progress, and how public accountability will be maintained as AI becomes more embedded in daily life.

Step-by-Step AI regulation 2025: Analysis and Action

This section translates the big picture into a practical, step-by-step framework for understanding and engaging with AI regulation 2025. Consider it a 5-step playbook for policymakers, researchers, content creators, and concerned citizens who want to translate sentiment into constructive policy.

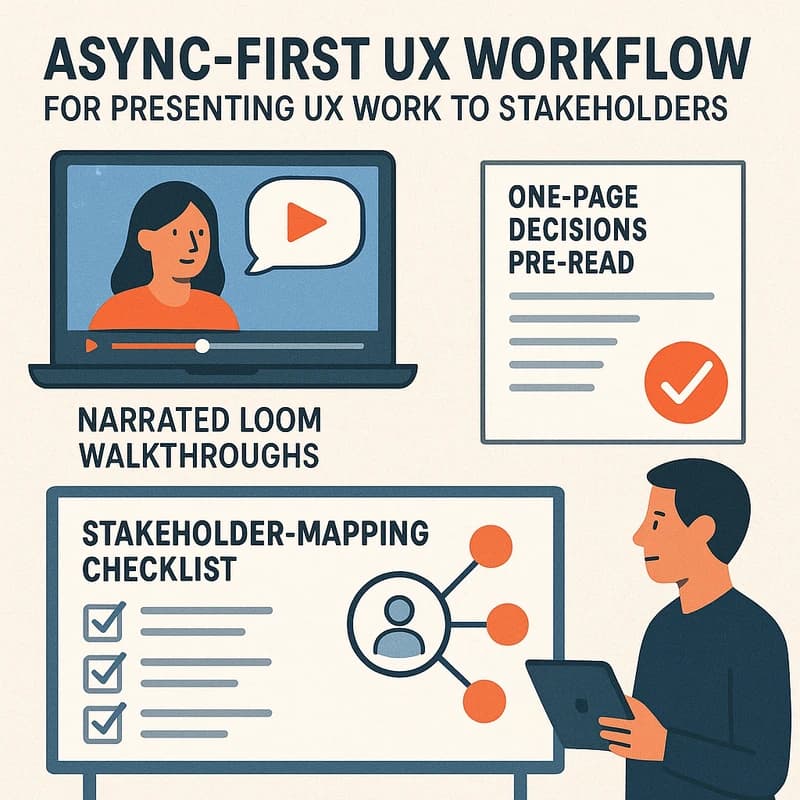

Step 1: Map stakeholders and values

- Identify players: governments, private firms, researchers, civil-society groups, and citizens.

- Clarify values: transparency, privacy, safety, fairness, and environmental sustainability.

- Data point: Social-media discourses show a broad spectrum of values being debated, from algorithmic fairness to the environmental footprint of AI systems.

Step 2: Define risk categories and governance tiers

- Create tiers based on impact and exposure to harm, with lighter rules for low-risk tools and stricter requirements for high-stakes applications (health, law enforcement, energy systems).

- Data point: Many proposals favor risk-based frameworks that scale governance to risk, rather than blanket bans.

Step 3: Establish transparency and accountability mechanisms

- Require disclosure of model types, data sources, and testing results; implement independent audits and redress pathways for affected communities.

- Data point: Audits are increasingly seen as essential to trust, particularly for systems that influence privacy and safety.

Step 4: Harmonize global coordination

- Work toward shared principles across borders to prevent regulatory fragmentation that hampers innovation and cross-border AI deployments.

- Data point: International forums are producing more collaborative blueprints, even as differences in national values persist.

Step 5: Create implementation and evaluation plans

- Develop pilot programs, evaluation metrics, and sunset clauses to refine rules as technology evolves.

- Actionable tip: Content creators and educators can translate these metrics into accessible explainers that help the public understand AI regulation 2025.

- Data points and practical angles:

- Startups and small businesses often push for predictable rules to reduce compliance risk while continuing to innovate in climate analytics and green tech.

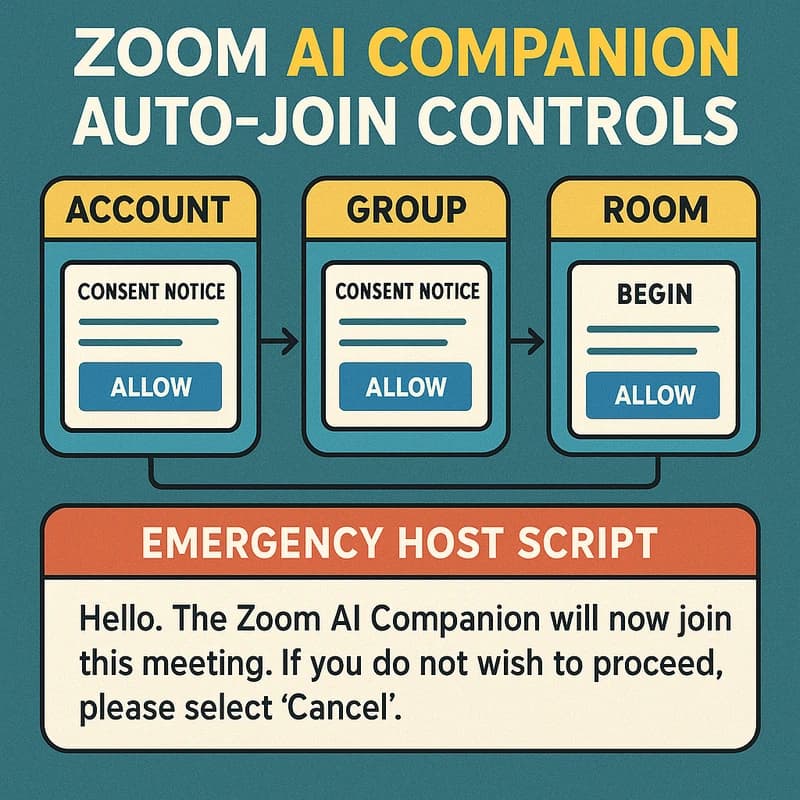

- Privacy advocates push for clear data-rights frameworks and consent mechanisms for AI-enabled data processing.

- Environmental impact assessments for AI deployments can become a standard part of governance, aligning AI regulation 2025 with sustainability goals.

- Related topics for internal linking: data privacy, digital ethics, climate data governance, startup policy readiness, cross-border data flows, algorithmic transparency.
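To make the risk tiers in Step 2 more concrete, here is a minimal Python sketch of how governance could scale with risk. It is illustrative only: the two-tier split, the `classify` rule, and the obligation lists are assumptions built from the examples in Steps 2 and 3, not the text of any actual regulation.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    HIGH = "high"


# Assumption: the high-stakes domains are the examples named in Step 2.
HIGH_STAKES_DOMAINS = {"health", "law enforcement", "energy systems"}

# Assumption: obligations per tier are hypothetical, loosely based on Step 3.
OBLIGATIONS = {
    RiskTier.LOW: ["disclose model type"],
    RiskTier.HIGH: [
        "disclose model type",
        "disclose data sources",
        "publish testing results",
        "independent audit",
        "redress pathway",
    ],
}


@dataclass
class AISystem:
    name: str
    domain: str


def classify(system: AISystem) -> RiskTier:
    """Assign a tier from the deployment domain (illustrative rule only)."""
    if system.domain.lower() in HIGH_STAKES_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.LOW


def obligations_for(system: AISystem) -> list[str]:
    """Look up the obligations attached to the system's tier."""
    return OBLIGATIONS[classify(system)]
```

A real framework would weigh impact and exposure to harm with far more nuance than a domain lookup; the point of the sketch is only that lighter rules attach to low-risk tools and stricter ones to high-stakes applications.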

Key Takeaway: A pragmatic, structured approach to AI regulation 2025 helps align innovation with accountability, paving the way for sustainable, community-centered AI progress.

People Also Ask

- What is AI regulation 2025 about?

- Why is AI regulation debate trending in 2025?

- What policies were proposed at the AI summit in 2025?

- How could AI regulation affect jobs and privacy?

- Which countries are leading AI regulation in 2025?

- What are the ethical AI breakthroughs cited in 2025 debates?

- How is AI regulation 2025 framed in international law?

- What are the main differences between global AI regulation and national rules?

- How could small businesses prepare for AI regulation 2025?

- What role do tech giants play in shaping AI regulation 2025?

- How will enforcement of AI regulation 2025 work?

- What is AI governance news 2025?

- What are the privacy implications of AI regulation 2025?

- What makes AI regulation debates different from traditional technology regulation?

Key Takeaway: These real-search questions capture the core concerns of readers seeking to understand the scope, impact, and practical implications of AI regulation 2025.

Expert Tips and Advanced Strategies

- Build narratives around AI regulation 2025 that combine concrete policy options with tangible outcomes. Explain how a risk-based approach protects privacy, while enabling climate analytics and conservation tech to flourish.

- Use data storytelling: illustrate how transparent model reporting, audit trails, and performance benchmarks can reduce energy use and improve trust in AI systems that monitor ecosystems.

- Frame policy proposals with concrete examples: show how a hypothetical AI-powered wildfire detection system would be governed under a risk-tier framework, including data provenance and third-party audits.

- Emphasize global coordination: highlight how harmonized standards in AI regulation 2025 reduce compliance complexity for climate-tech firms operating across borders.

- Quotes and sources: reference reputable think tanks, regulatory bodies, and academic research to bolster authority without overclaiming. If you quote, ensure accuracy and context.

- Engagement hooks: pose a question to readers about which safeguards they value most (privacy, transparency, or safety) and invite them to share experiences with AI governance in their communities.

- Internal linking ideas: climate-tech policy, data privacy safeguards, digital sovereignty, algorithmic fairness, sustainability in tech, open-data governance, AI transparency dashboards, ethical AI guidelines.
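As one way to ground the wildfire-detection example from the tips above, the following Python sketch models a disclosure record and checks which items are still missing. Everything here is hypothetical: the `Disclosure` fields, the `missing_items` helper, and the rule that only high-tier systems need a third-party audit on file are assumptions for illustration, not requirements drawn from any real framework.

```python
from dataclasses import dataclass, field


@dataclass
class Disclosure:
    """Hypothetical disclosure record for one AI system."""
    system_name: str
    risk_tier: str  # "low" or "high" in this sketch
    model_type: str = ""
    data_sources: list[str] = field(default_factory=list)
    testing_results: str = ""
    third_party_audits: list[str] = field(default_factory=list)


def missing_items(d: Disclosure) -> list[str]:
    """Return the disclosure items that are still empty for this record."""
    missing = []
    if not d.model_type:
        missing.append("model_type")
    if not d.data_sources:
        missing.append("data_sources")
    if not d.testing_results:
        missing.append("testing_results")
    # Assumption: only high-tier systems need a third-party audit on file.
    if d.risk_tier == "high" and not d.third_party_audits:
        missing.append("third_party_audits")
    return missing


# Hypothetical wildfire-detection system with data provenance recorded
# but no testing results or audits yet.
wildfire = Disclosure(
    system_name="wildfire-detector",
    risk_tier="high",
    model_type="image classifier",
    data_sources=["satellite imagery", "ground sensor feeds"],
)
print(missing_items(wildfire))  # ['testing_results', 'third_party_audits']
```

An explainer could walk readers through a record like this to show what "data provenance and third-party audits" would mean in practice for a single system.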

Key Takeaway: For credible, actionable content, pair policy options with real-world examples, data-driven insights, and climate-conscious context to build authority and trust around AI regulation 2025.

What's Next

The road ahead for AI regulation 2025 is not a single treaty but a series of evolving norms, pilots, and cross-border agreements. Expect continued headline-making summits, more granular guidelines for risk-based governance, and ongoing public dialogue about how to protect jobs, privacy, and the planet. The next steps involve policymakers releasing detailed implementation roadmaps, researchers publishing open audits and benchmarks, and influencers translating these developments into accessible, hopeful narratives for audiences worldwide.

- Possible trajectories:

- A growing coalition of countries pushes for interoperable AI governance standards suitable for global deployment, including climate-tech applications.

- Regulation evolves from high-level principles to concrete reporting requirements, test protocols, and audit rights for individuals.

- Public literacy campaigns emerge to help citizens understand AI regulation 2025 and its implications for daily life.

- What readers can do now:

- Follow credible policy analyses on AI regulation 2025, participate in public consultations, and share balanced content that highlights both opportunities and risks.

- If you’re a creator or educator, develop explainer series showing how governance affects AI-assisted projects in climate and conservation.

Key Takeaway: The future of AI regulation 2025 is collaborative. As communities, policymakers, and technologists converge on shared goals, proactive engagement and transparent practices will shape a sustainable path for AI—and for the people and environments that depend on it.

Related topics for ongoing exploration and internal linking:

- Climate-tech policy and governance

- Data privacy safeguards and consent

- Digital sovereignty and cross-border data flows

- Algorithmic bias and fairness

- Sustainability in tech and green AI

- Open-data governance and transparent benchmarking

Final note from Lila: As someone who writes eco-adventure tales, I imagine AI regulation 2025 as the compass that keeps our explorations wise and kind. It’s about safeguarding people, protecting habitats, and letting creativity in AI flourish without trampling the delicate balance of our world. The conversation on AI regulation 2025 is not just about rules; it’s about choosing a future where intelligent systems amplify stewardship, resilience, and wonder.

Key Takeaway: AI regulation 2025 is a shared quest to align invention with responsibility, ensuring that the next era of AI serves people, communities, and ecosystems with fairness and care.